Part

01

of one


Growth Experiments

The growth experiments used by seven companies (Microsoft, Amazon, Adore Me, Molson Coors, MightyHandle, Hubspot, and Humana) are presented below. The companies span a range of product types, including one software company, to provide examples from different industries. Their experimentation covers website design, product recommendations, online store photography, product design, and marketing strategy. Some of these examples may be developed into case studies in due course, so the availability of additional public information is noted for each example.

Microsoft — Bing Search Engine Headline Variation

- Back in 2012, a Microsoft employee had an idea regarding a variation to the way headings were presented by the Bing search engine. Testing was needed to evaluate its viability and impact. Unfortunately, due to time pressures and a multitude of other ideas, the idea went nowhere for several months, until one of the engineers recognized that the code required for a simple online experiment could be written relatively quickly for only a small cost.

- An A/B online experiment was put in place. The test was simple, comparing the results of a control group (A) using the current Bing headline format to an experiment group (B) using the proposed headline variation. Users were randomly assigned to either group A or group B when using the Bing search engine. Key metrics regarding the use of each group were then evaluated to assess the results.

- A built-in alert, the "too good to be true trigger," was included in the testing protocol. It was designed to send an alert if the results appeared to be overly skewed toward one group so that engineers could assess whether a bug in the test program was responsible for the results being seen. After just a few hours, an alert was triggered.

- When reviewed, the results proved not to be caused by a bug, and what they revealed was astounding. The headline variation had produced a 12% increase in revenue, and notably, it had no impact on the user experience. When implemented, this variation would result in revenue growth of $100 million in the US alone. Needless to say, the variation soon became the new Bing norm. It has become the highest-revenue growth experiment in Microsoft history.

- Microsoft has a long and well-documented history of growth experiments, making it an ideal candidate for a future case study. Microsoft has developed its own experimentation platform, on which more than 15 product groups within the Microsoft family currently run growth experiments, including Bing, Edge, Exchange, Identity, MSN, Office client, Office online, Photos, Skype, Speech, Store, Teams, Windows, and Xbox.
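The A/B assignment and the "too good to be true" trigger described in this example can be sketched in a few lines. This is a minimal illustration, not Microsoft's implementation: the function names, the hash-based assignment (a deterministic stand-in for random assignment), and the 10% alert threshold are all assumptions made for the sketch.

```python
import hashlib


def assign_group(user_id: str, experiment: str = "headline-test") -> str:
    """Assign a user to control (A) or variation (B).

    Hashing the user ID is an assumed, deterministic stand-in for random
    assignment: the same user always lands in the same group.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def relative_lift(control_value: float, variant_value: float) -> float:
    """Relative change of the variation against the control (0.12 means +12%)."""
    if control_value <= 0:
        raise ValueError("control_value must be positive")
    return (variant_value - control_value) / control_value


def too_good_to_be_true(control_value: float, variant_value: float,
                        threshold: float = 0.10) -> bool:
    """Flag results skewed enough to warrant a bug check.

    The 10% threshold is hypothetical; the source does not state the real one.
    """
    return abs(relative_lift(control_value, variant_value)) >= threshold
```

With a control metric of 100 and a variation metric of 112, `too_good_to_be_true(100, 112)` flags the result for review, which mirrors the alert that fired in the Bing test. The flag only asks engineers to check for a bug; it does not decide whether the result is genuine.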

Amazon — Product Recommendations

- Jeff Bezos, the CEO of Amazon, has said, when discussing Amazon's success, "Our success at Amazon is a function of how many experiments we do per year, per month, per week, per day."

- Many of Amazon's experiments are A/B experiments relating to product placement optimization. One of the experiments Amazon ran explored the use of product recommendations and the effect a recommendation has on consumer buying behavior.

- Amazon used two different product pages to test the impact of recommendations on buyer behavior. The first product page contained no recommendation.

- The variation was the inclusion of a product recommendation following the search results.

- Amazon then analyzed the impact of the recommendation on consumer buying behavior to determine if it resulted in more consumers purchasing the recommended product. Unfortunately, Amazon does not typically release its growth experimentation testing results, so the results are unknown.

- There is a significant amount of information available on the types and results of growth experiments completed by Amazon, which would make it a viable option to explore in a future case study. Its latest experiment, which started in late August 2020, uses "Dash Cart," an innovative shopping cart that, among other things, allows users in its grocery stores to check out without a cashier.

Adore Me — Variations in Product Photographs

- Lingerie company Adore Me has learned a lot about consumer buying behavior through its online experimentation. As part of its ongoing growth strategy, Adore Me continuously looks for the optimal way to present its lingerie products to the consumer to maximize its revenue potential. It uses its online store to test different model photographs to determine the styles, model types, poses, and props most likely to entice the consumer to make a purchase.

- When consumers visit Adore Me's online store, they will see one of two photograph variations of models wearing a particular product. The company then assesses the consumer response to each photograph variation to determine which variation creates higher sales revenue. This information is then used to tweak other product photos, reflecting the same elements as the tested photograph. The photograph variations include props, model hair color, model popularity, and posing positions.

- The insights Adore Me has discovered through this experimentation are fascinating: "Sex doesn't sell, so forget the boudoir shot. Blondes don't work. Props distract. Couches are fine. Playing with hair is ideal." Often the variation that results in better sales is subtle or minor. For example, "a model with her hand on her hip does not sell the lingerie as well as that same model with her hand on her head." A campaign using plus-size models resulted in four times as many sales as the same product on a skinny blonde.

- Through this experimentation, Adore Me identified that seeing the product on a model the consumer likes or identifies with is more likely to result in a sale than a price cut. The testing showed that removing the random elements of an initiative can result in a competitive advantage. The results saw Adore Me's growth rate hit 15,606% in 2014, making it the second-fastest-growing company in the NY Inc. 5000.

- Most of the information available regarding Adore Me's growth experimentation relates to sales optimization through experiments of this nature. There is little information regarding the company's experimentation beyond this.

MightyHandle — New Handle Concept

- MightyHandle concluded that the "suburban Mom" market might require a different type of shopping bag handle from the one that had been successful in its traditional market of "twenty-something city dwellers." Determining the type of handle that worked best for the "suburban Mom" was key to its future growth, with the company having secured a "test window" at Walmart.

- The company realized that social media channels such as Facebook were key to determining the handle that worked best for different groups. A range of "different creative concepts: images, taglines, and combinations of both that presented the target demographic with different uses for the handle" was used in a Facebook marketing campaign to identify the handle that best met the demands of the target population. Some of the concepts tested are illustrated below.

- Using the comments and feedback of users, MightyHandle developed what they considered the winning design, which they tested against the more traditional shopping bag in store at Walmart. The feedback from customers was then evaluated.

- The results were heavily skewed in favor of MightyHandle's new product. In fact, the results were so convincing that Walmart was prompted to sign a deal with MightyHandle that saw MightyHandle's products rolled out across 3,500 Walmart stores nationwide.

- There is a considerable amount of information available illustrating the process MightyHandle went through in developing both the concept and the test, but very little information is available on other growth experiments that MightyHandle has undertaken.

Molson Coors — Creative Targeting

- To make its marketing more efficient, Molson Coors wanted to see whether a "trigger-based" marketing campaign could be successfully deployed. The company was aware of a correlation between hot days and increased happy hour consumption rates.

- Molson Coors tested its hypothesis through a range of Facebook advertisements highlighting one of its cider brands, with a call to action that led viewers toward a local happy hour. The advertisements were tested on specific demographic groups in Winnipeg, Vancouver, Toronto, and Halifax.

- Different weather-related advertisements were developed, with taglines like "Hot out there, Toronto?" Control advertisements without the weather-related content were also developed, with taglines like "What time is it? It's Beer o'clock." When the temperature reached 23 degrees or higher, the real-time advertisements were run on Facebook.

- The results were conclusive, with the weather-related advertisements exceeding the control advertisements' performance by 89% based on click-through rates. Those advertisements also saw a 33% increase in the number of comments left. These results gave Molson Coors valuable data to leverage in the ongoing development of its marketing strategy.

- This was the only case of Molson Coors growth experimentation identified, and the figures above are the only data available on the experiment. Stella Artois ran a similar weather-related experiment using billboards, with similar results.
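The trigger rule and the click-through comparison in this example can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the temperature unit is assumed to be Celsius (the source says only "23 degrees"), and the click and impression counts in the usage note are invented to reproduce the reported 89% figure.

```python
TRIGGER_TEMPERATURE = 23.0  # threshold from the text; Celsius is an assumption


def weather_trigger_active(temperature: float,
                           threshold: float = TRIGGER_TEMPERATURE) -> bool:
    """Return True when the weather-triggered advertisements should run."""
    return temperature >= threshold


def click_through_rate(clicks: int, impressions: int) -> float:
    """Clicks divided by impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


def ctr_lift(control_ctr: float, test_ctr: float) -> float:
    """Relative improvement of the test CTR over the control CTR (0.89 means +89%)."""
    if control_ctr <= 0:
        raise ValueError("control_ctr must be positive")
    return test_ctr / control_ctr - 1.0
```

For instance, with hypothetical counts of 100 clicks on 10,000 control impressions and 189 clicks on 10,000 weather-related impressions, `ctr_lift` returns approximately 0.89, the size of improvement reported above.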

Hubspot — Mobile Call to Action

- Appropriate calls to action (CTA) in a marketing plan are key to a company's growth. On its blog posts, Hubspot uses a range of different calls to action, including anchor text, slide-in CTAs, and graphic CTAs. Hubspot was concerned that the CTA on its website could become intrusive if viewed on a mobile device.

- Hubspot wanted to test the graphic bar CTA that appeared at the bottom of its blog posts, using thank-you page views and CTA clicks as the measurement metrics. The CTA remained unchanged for the control group, while in the second variation, the CTA had a minimize/maximize option that let users collapse it. A third variation gave users the ability to dismiss the CTA altogether, while the final variation did not allow the user to dismiss or minimize the CTA.

- The results were interesting, with the second variation seeing a 7.9% increase, the third an 11.4% decrease, and the fourth a 14.6% increase. Basing its forecasts on those figures, Hubspot was able to see that employing the fourth variation on mobile devices would lead to an additional 1,300 click-throughs per month and contribute positively to its ongoing growth.

- There are a considerable number of examples of Hubspot using growth experimentation in its business practice, mainly A/B-type testing. These could be explored in a future case study.
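The way the variation results above feed into a forecast can be illustrated with a short sketch. The relative changes are the figures reported for the second, third, and fourth variations. The function names are hypothetical, and the monthly baseline is a parameter because the source does not state the baseline Hubspot used.

```python
# Relative changes against the control, as reported in the text.
VARIANT_LIFTS = {"second": 0.079, "third": -0.114, "fourth": 0.146}


def best_variant(lifts: dict) -> str:
    """Return the name of the variation with the largest relative change."""
    return max(lifts, key=lifts.get)


def projected_extra_events(baseline_per_month: float, lift: float) -> float:
    """Project additional monthly events from a baseline and a relative lift.

    The baseline is an input the caller must supply; it is not in the source.
    """
    if baseline_per_month < 0:
        raise ValueError("baseline_per_month must be non-negative")
    return baseline_per_month * lift
```

Here `best_variant(VARIANT_LIFTS)` selects the fourth variation, and a purely illustrative baseline of 1,000 events per month would project roughly 146 additional events at its 14.6% lift.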

Humana — Site Banners

- One of the key ways a company can induce growth is through additional sales. The website is a key aspect of this strategy. The top portion of the landing page is critical. A site banner is often placed there promoting the company's wares, but caution needs to be taken to ensure that the right message is displayed. Humana, a healthcare insurance provider, decided to test its site banner's effectiveness in driving sales.

- Iterative testing was used to test the new banner. This meant that different aspects of the banner were tested in various stages, with the "winners" ultimately combined to produce the new site banner. A graphical representation of the process is detailed below. Humana referred to the process as the super test.

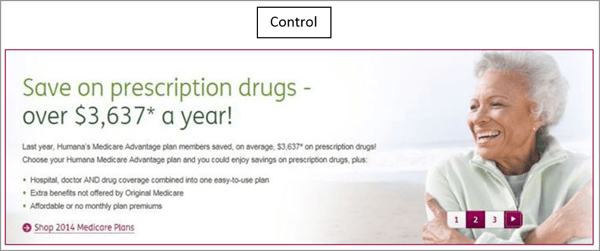

- The control in Humana's first experiment was a standard banner with a reasonable amount of text, a somewhat weak CTA, and a direct message.

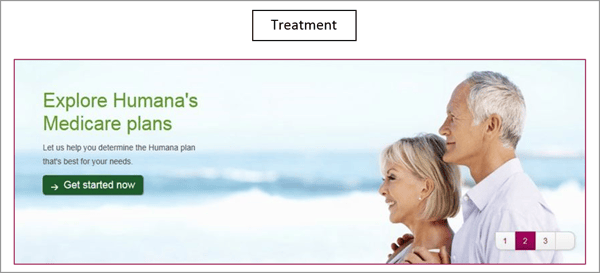

- The test banner contained simplified and concise text and a simple message.

- The result was conclusive. The simplified banner resulted in a 433% increase in click-throughs when compared to the control site banner. Humana then took the experiment a step further, using the preferred banner as a control in a new experiment, which tested the CTA. The CTA was changed to include language that Humana perceived would be a harder sell.

- This experiment yielded further positive results, with a 192% increase in click-throughs from the new CTA. The Humana experiment illustrates how experiment results can be built upon throughout the design or redesign process.

- There is a reasonable amount of information available discussing Humana's growth experimentation, in part because it offers a different perspective on A/B testing.
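Because each stage of Humana's iterative testing used the previous winner as its control, the stage-by-stage gains multiply rather than add. The sketch below shows that arithmetic. It assumes both stages measured the same click-through metric, which the source does not confirm, and the function name is hypothetical.

```python
def compound_lift(stage_lifts: list) -> float:
    """Combine sequential relative lifts into one overall relative lift.

    Each lift is measured against the previous stage's winner, so the
    growth factors (1 + lift) are multiplied together.
    """
    factor = 1.0
    for lift in stage_lifts:
        factor *= 1.0 + lift
    return factor - 1.0
```

Under that assumption, `compound_lift([4.33, 1.92])` combines the 433% and 192% increases into an overall lift of about 14.56, since 5.33 × 2.92 = 15.5636 times the original control's click-throughs. That is an increase of roughly 1,456%, not the 625% that simply adding the two percentages would suggest.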